This use case is split into a two-part blog post. The goal is to show how the Critical Manufacturing machine learning module can be used to predict material defects.

Overview

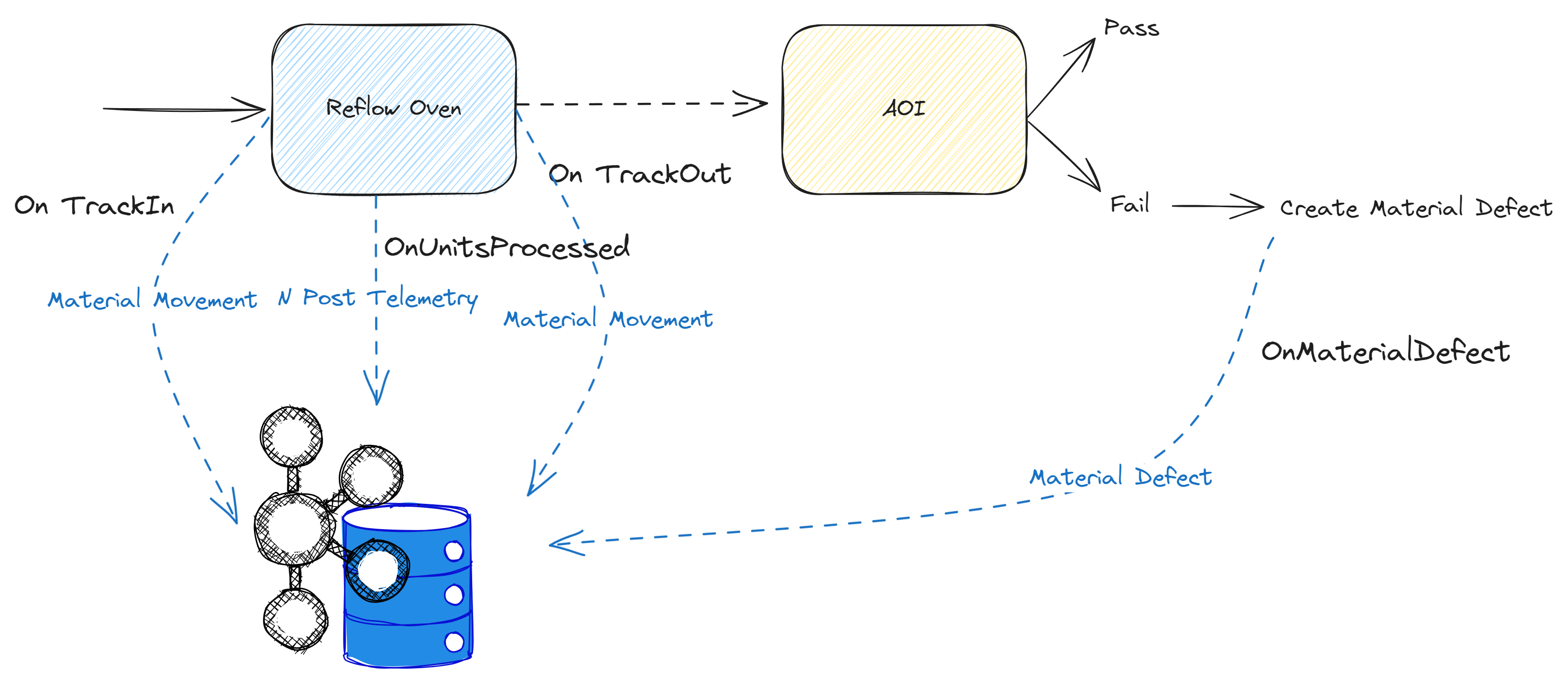

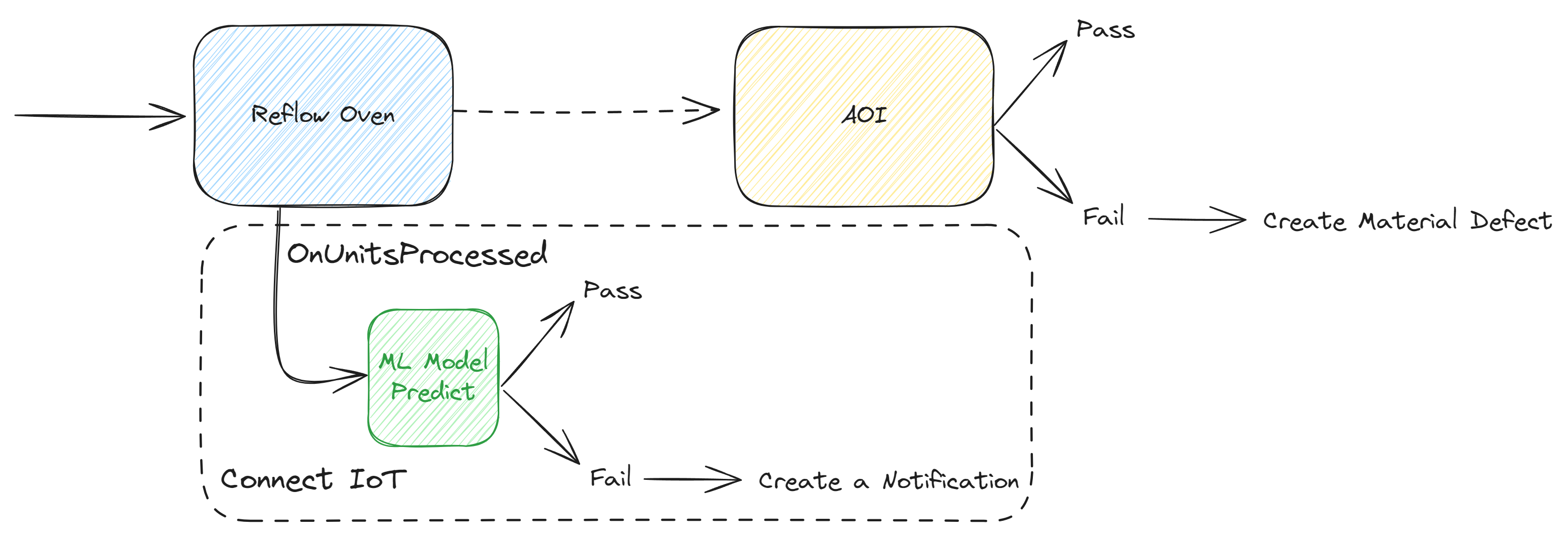

The full use case is to train a machine learning model with data from a machine and correlate it with the MES material defects recorded at an inspection machine.

We want to be able to predict defects and notify the employees that something is wrong.

The first part of this use case will cover:

- Ingesting Data (Equipment Integration)

- Understanding Data (Canonical Data Model)

- Combining System Data with Machine Data

- Creating a Dataset

Ingesting Data

The first challenge is being able to collect structured and contextualized data from our shopfloor.

Creating Information

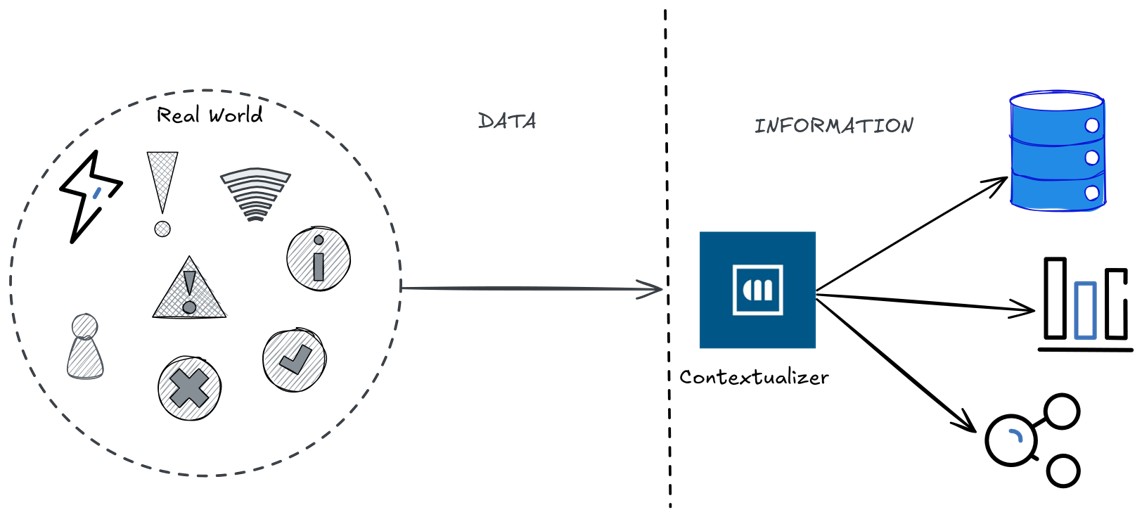

The shopfloor is a data-rich environment. Alarms, operator actions, quality reports, machine interfaces, and machine logs all generate a large amount of data during the day-to-day operations of a production environment.

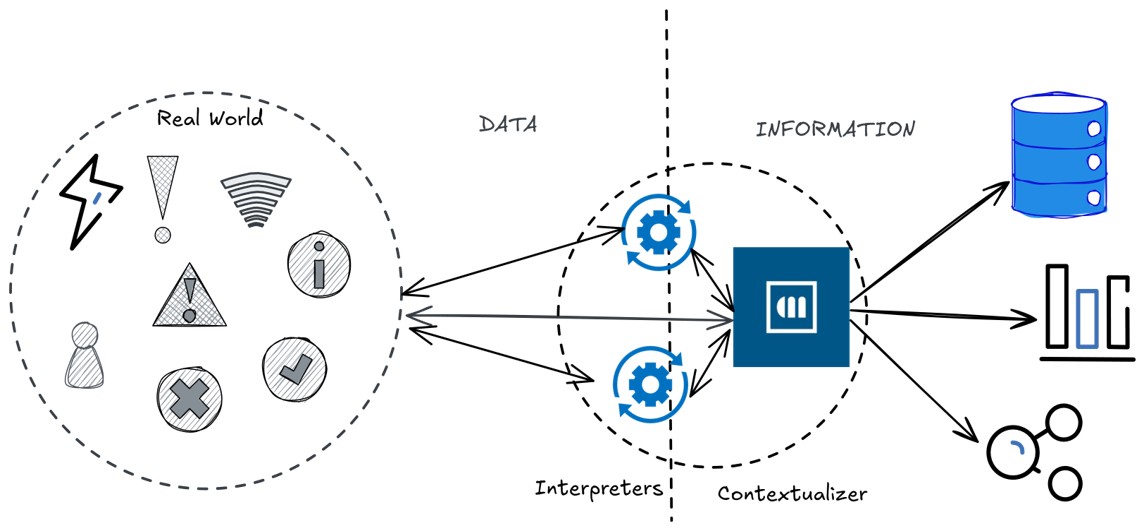

The biggest challenge is not just collecting that information, but collecting it with enough context embedded to make it usable. The Manufacturing Execution System (MES) plays a key role as the contextualizer of the data: it infuses the data with the context of the shopfloor. For example, it knows where the data belongs in the ISA-95 structure (is it data from a Resource, an Area, a Site, a Facility?), along with the other aspects that turn data into information.
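To make contextualization concrete, here is a minimal sketch, assuming a raw reading arrives knowing only its resource and that the MES knows where that resource sits in the hierarchy. The `ResourceContext` class, the `HIERARCHY` lookup table, the `contextualize` helper, and all sample names are hypothetical; the Enterprise/Site/Facility/Area/Resource levels mirror the context columns used in the queries later in this post.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ResourceContext:
    """Hypothetical ISA-95 style location of a resource, as known by the MES."""
    enterprise: str
    site: str
    facility: str
    area: str
    resource: str


# Hypothetical MES master data: resource name -> its place in the hierarchy.
HIERARCHY = {
    "ReflowOven01": ResourceContext("EnterpriseA", "Site1", "FacilityA", "SMT", "ReflowOven01"),
}


def contextualize(resource_name: str, reading: dict) -> dict:
    """Attach hierarchy context to a raw machine reading (KeyError if the resource is unknown)."""
    context = HIERARCHY[resource_name]
    return {**asdict(context), **reading}


enriched = contextualize("ReflowOven01", {"sensor": "Zone1_Top", "value": 231.5})
print(enriched["area"], enriched["value"])  # SMT 231.5
```

The raw reading alone says nothing about where it came from; after enrichment it can be filtered or grouped by any level of the hierarchy.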

The Critical Manufacturing MES provides integration applications that bridge the gap between the MES and the shopfloor data producers. These applications handle all the interfacing with the outside world and then feed the data into the MES.

Reality is messy. We need applications that sit close to the real world and translate it, and a common system that then adds context to that translated reality.

Equipment Integration - Overview

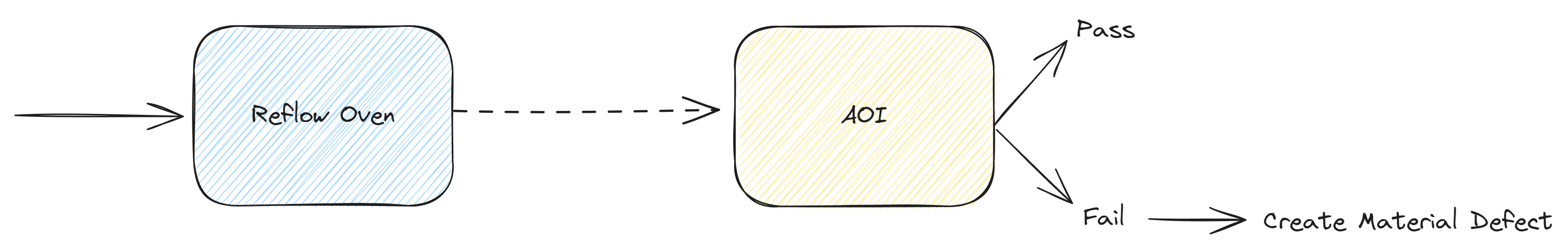

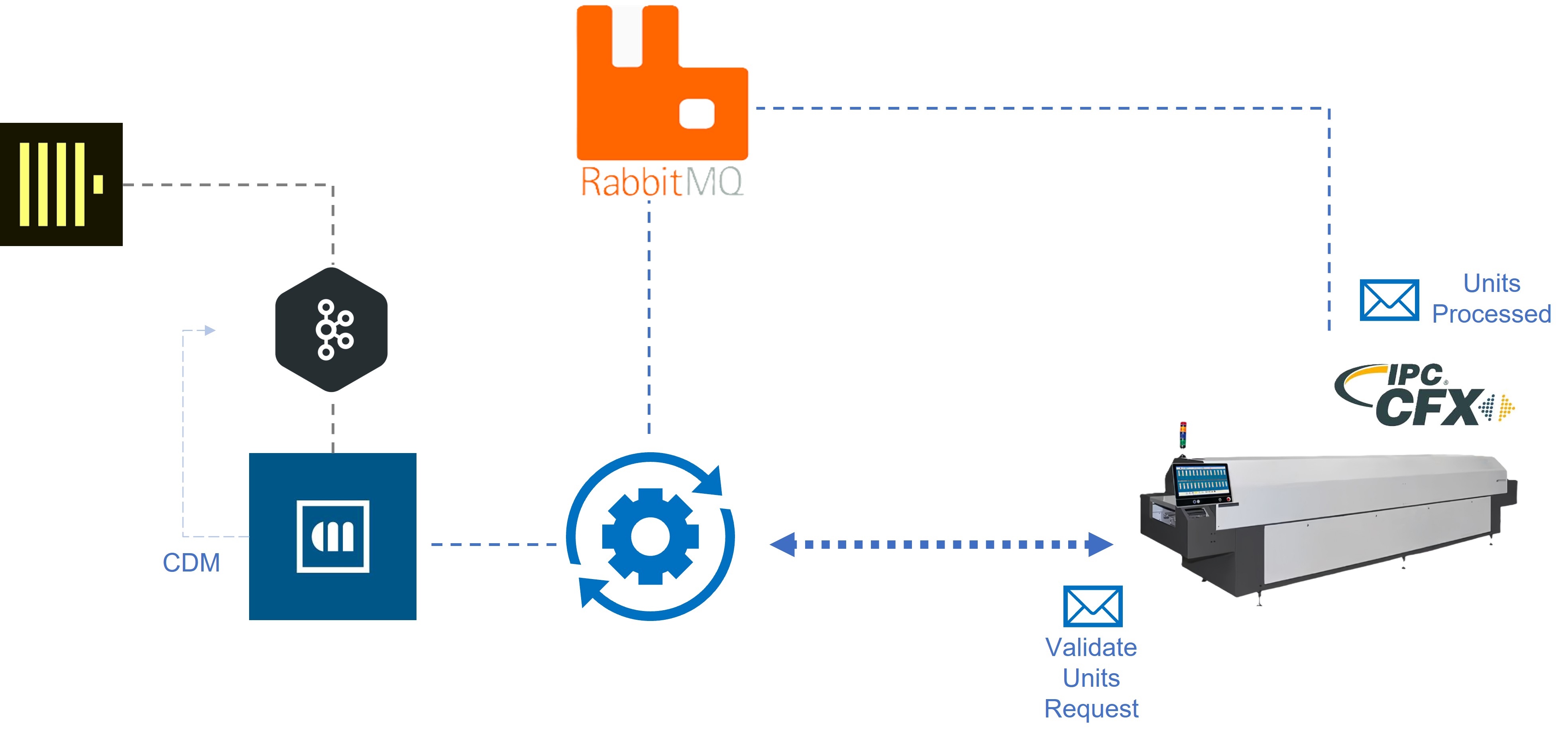

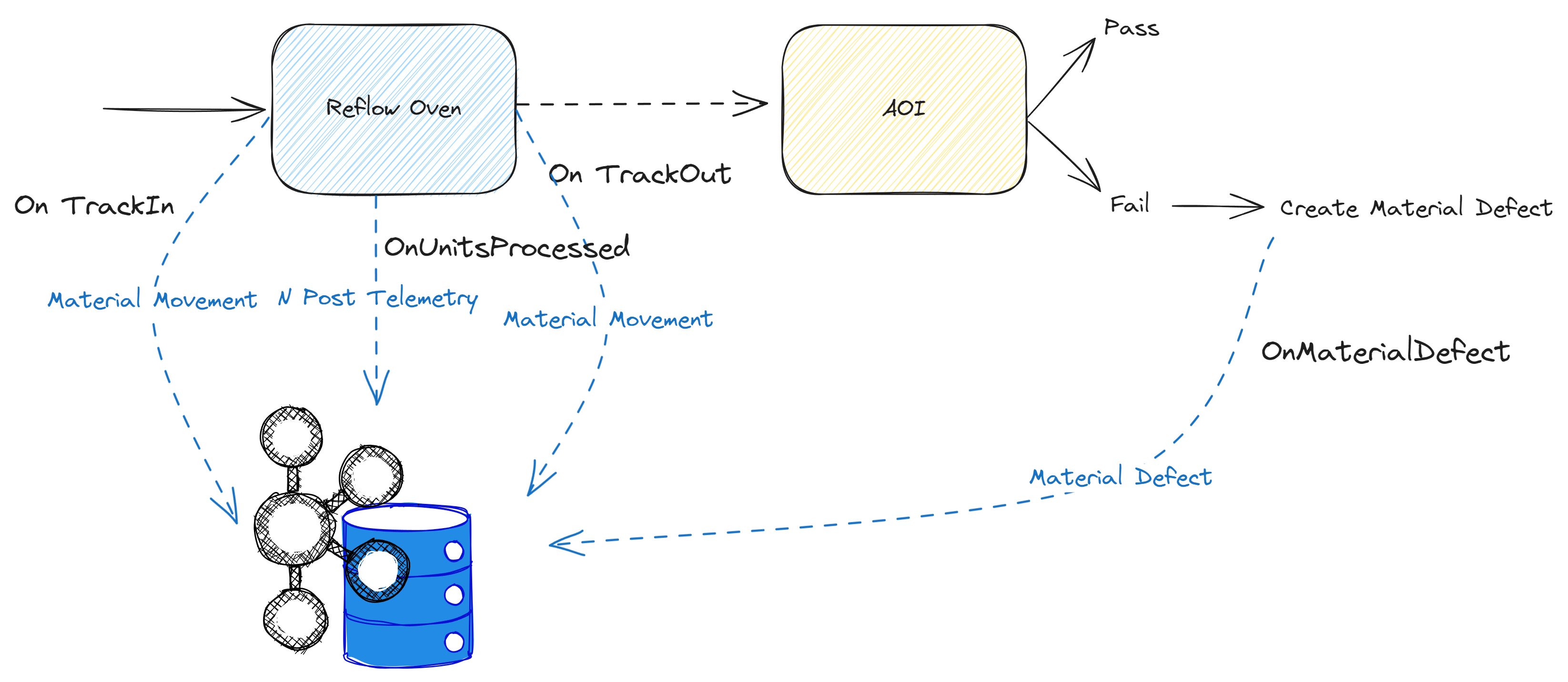

We will use the Critical Manufacturing Connect IoT module to connect to our equipment. For our use case, the equipment is an SMT Reflow Oven.

SMT (surface mount technology) is a very interesting case in the equipment integration world because it uses IPC-CFX, a strongly typed, AMQP-based open standard. IPC-CFX supports both brokered communication and peer-to-peer communication for control actions.

For our use case we will leverage brokered communication to ingest the IPC-CFX events generated throughout the Reflow Oven production lifecycle. The protocol itself already defines which events mark each stage of the process.

For this use case we will use three events:

- Work Started: signals the MES to change the material to In Process.

- Units Processed: emitted at the end of an oven run, it reports the state of the oven throughout the material's process cycle.

- Work Completed: changes the material to Processed and signals that it has finished the Reflow Oven process.
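The event-to-action mapping described above can be sketched as a dispatch table. The CFX message names follow the `CFX.Production...` naming of the standard as we understand it (verify them against the IPC-CFX documentation); the action strings and the `route` helper are hypothetical placeholders for the Connect IoT workflows described next, not part of any product API.

```python
# Hypothetical mapping from CFX message names to the MES-side action each one drives.
EVENT_ACTIONS = {
    "CFX.Production.WorkStarted": "track_in",                       # material -> In Process
    "CFX.Production.Processing.UnitsProcessed": "post_telemetry",  # oven data for the run
    "CFX.Production.WorkCompleted": "track_out",                   # material -> Processed
}


def route(message_name: str) -> str:
    """Return the action for a CFX message, or 'ignore' for events this integration skips."""
    return EVENT_ACTIONS.get(message_name, "ignore")


for name in ("CFX.Production.WorkStarted", "CFX.Heartbeat"):
    print(name, "->", route(name))
```

Keeping unhandled messages explicit ("ignore") makes it easy to see which parts of the CFX traffic the integration deliberately does not consume.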

Equipment Integration - Work Started / Work Completed CFX

For any MES, the start and end of a process are key aspects of an integration: they allow the process to be tracked from beginning to end.

The IPC-CFX standard provides in-depth documentation for each of these events.

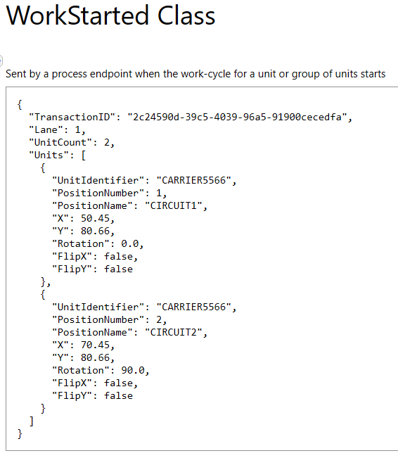

For Work Started:

This event carries a TransactionID that follows all the events emitted during the material's lifecycle. It also identifies which material is starting the process, along with other relevant information about that material.

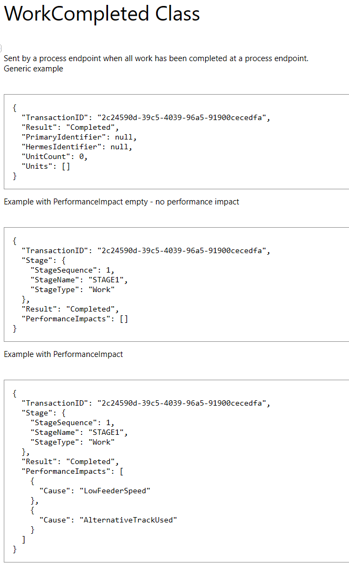
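A minimal sketch of pulling the fields needed for track-in out of a Work Started message, assuming the body has already been received as JSON. The field names `TransactionID`, `PrimaryIdentifier` and `Units[].UnitIdentifier` reflect our understanding of the CFX WorkStarted message and should be checked against the IPC-CFX documentation; the `WorkStartedInfo` class, the `parse_work_started` helper and the sample values are hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class WorkStartedInfo:
    transaction_id: str   # shared by every event of this material's run
    material: str         # identifier of the material entering the oven
    units: list[str]      # individual unit identifiers, if the machine reports them


def parse_work_started(raw: str) -> WorkStartedInfo:
    """Extract the fields used for track-in from a simplified WorkStarted JSON body."""
    body = json.loads(raw)
    return WorkStartedInfo(
        transaction_id=body["TransactionID"],
        material=body["PrimaryIdentifier"],
        units=[unit["UnitIdentifier"] for unit in body.get("Units", [])],
    )


sample = ('{"TransactionID": "3f2a-01", "PrimaryIdentifier": "PANEL-0001",'
          ' "Units": [{"UnitIdentifier": "PANEL-0001-1"}]}')
info = parse_work_started(sample)
print(info.transaction_id, info.material, info.units)
```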

For Work Completed:

The Work Completed event provides the process result and signals the end of the process.

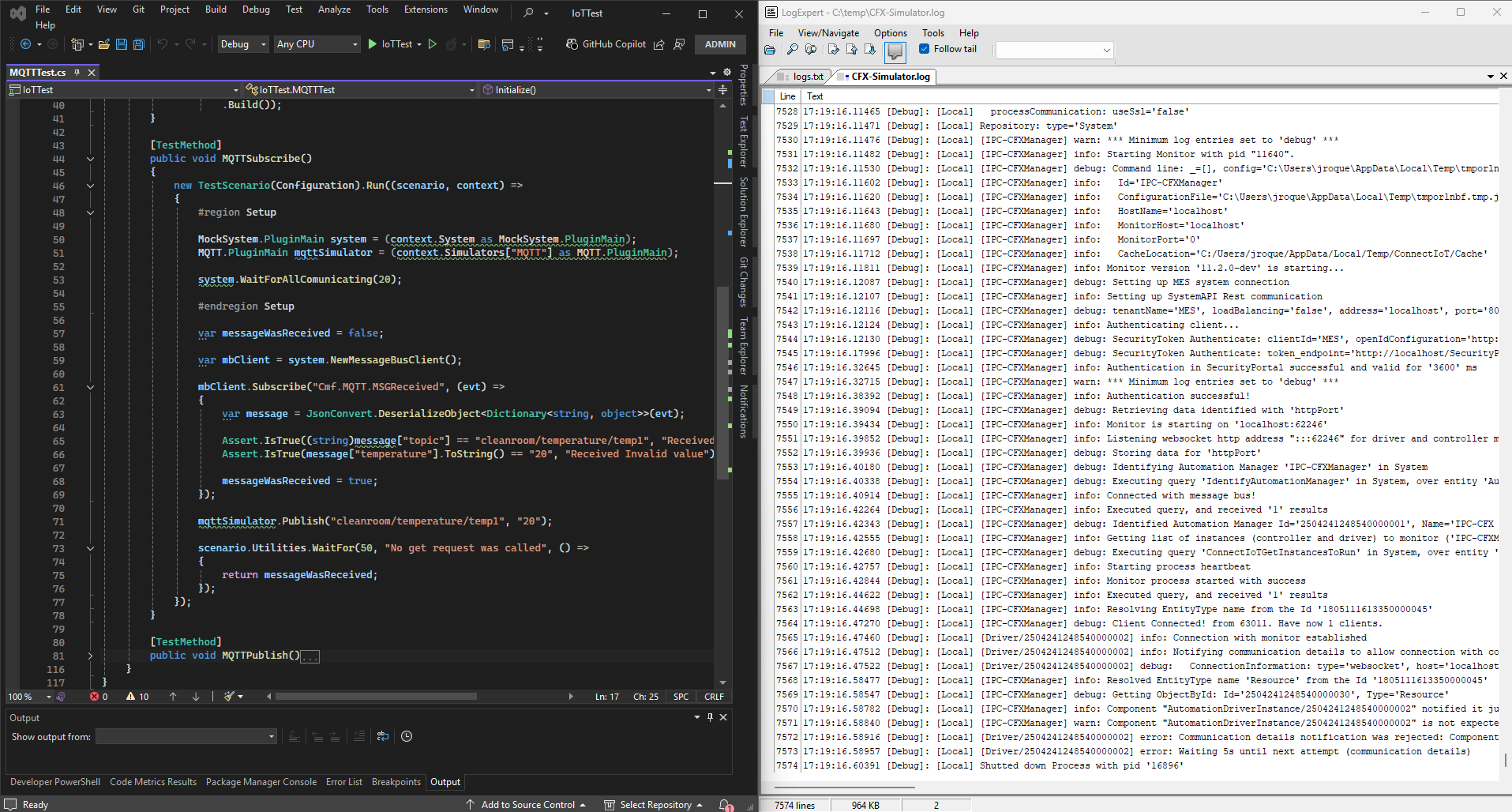

Equipment Integration - Work Started / Work Completed Connect IoT

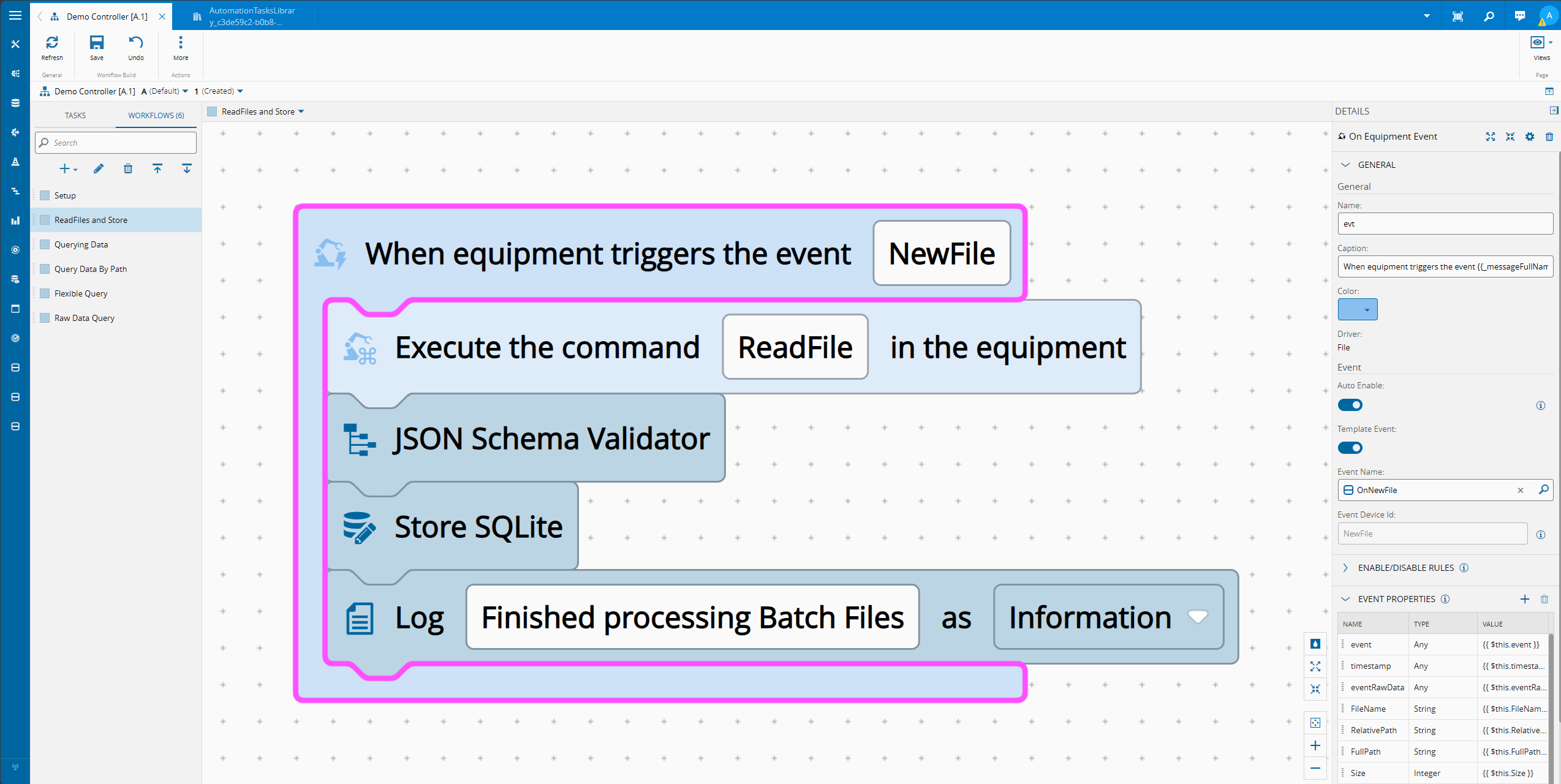

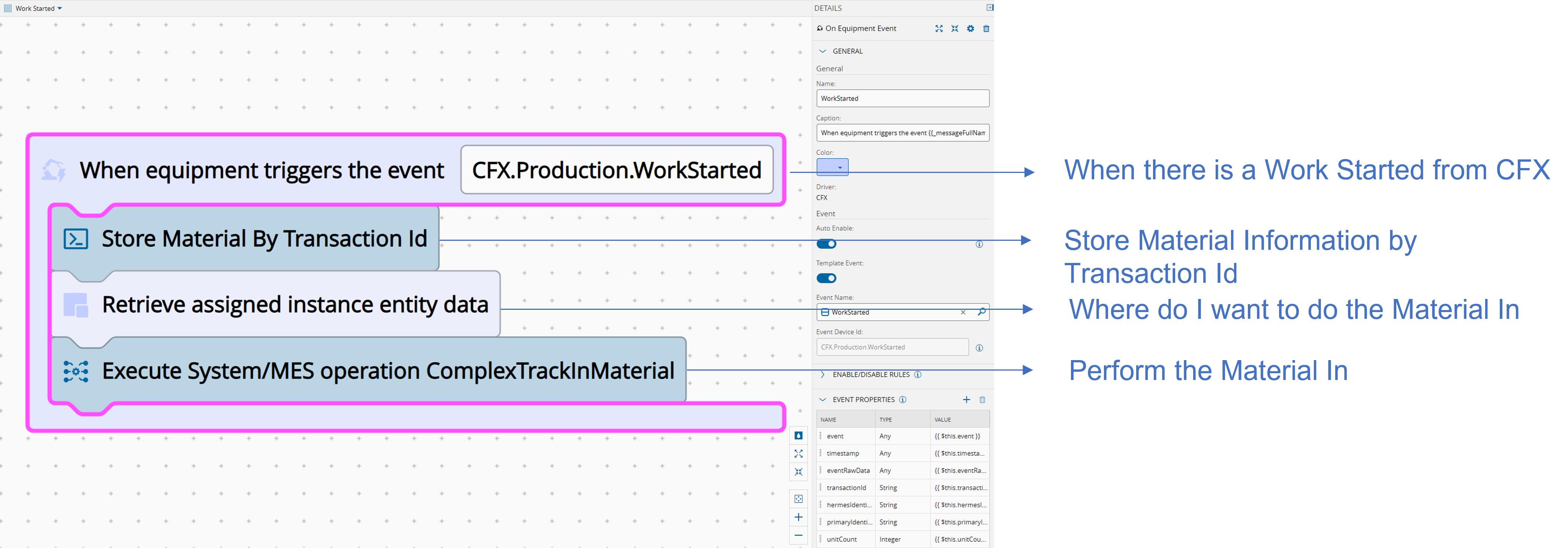

In Connect IoT, this is a very simple integration.

For Work Started, an Equipment Event task receives the event; we then store the TransactionID context information, retrieve the Resource linked to this integration, and call the MES service ComplexTrackInMaterial.

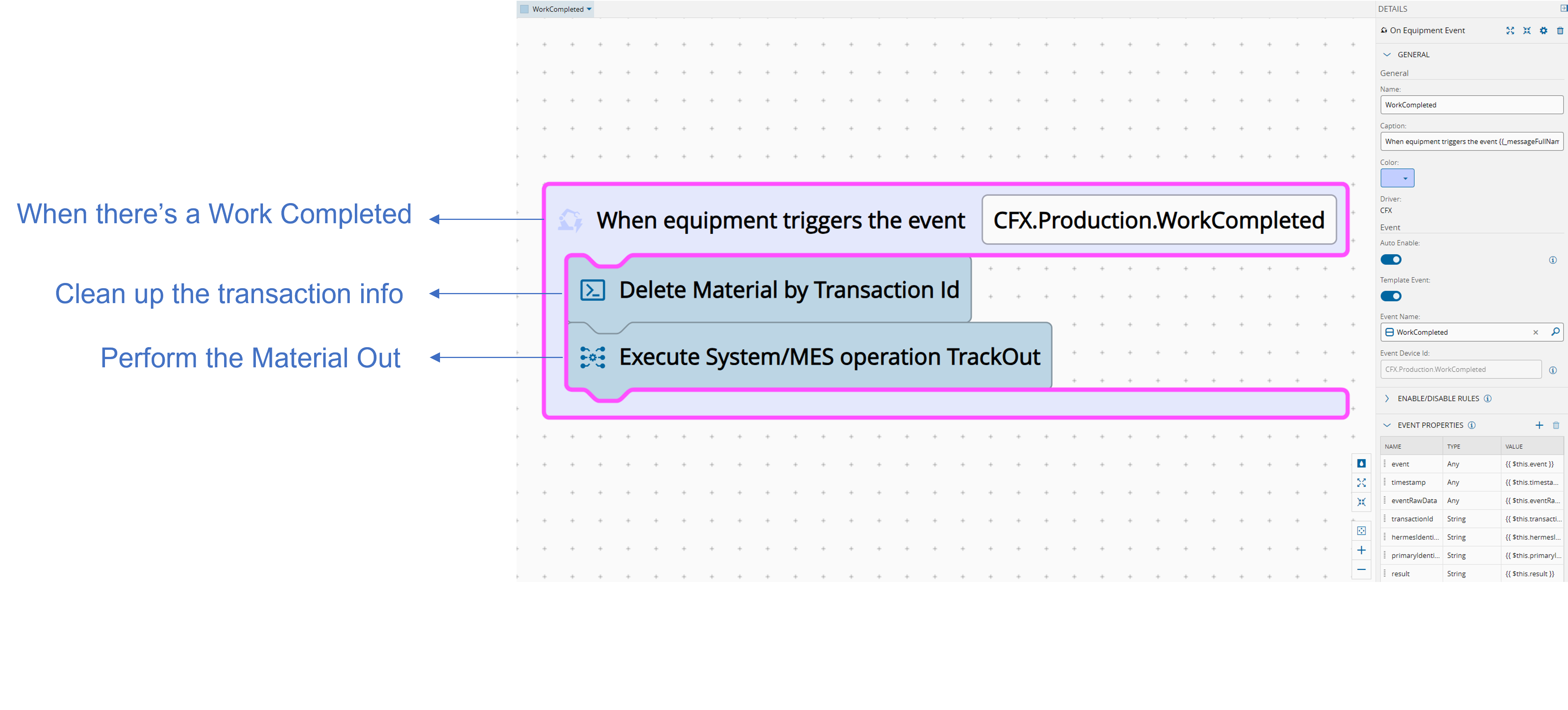

Work Completed is very similar: we do some housekeeping by clearing the stored transaction information and then call the MES service TrackOut.

As you can see, Connect IoT gives the user a much simpler, ready-to-use interface to the protocol.

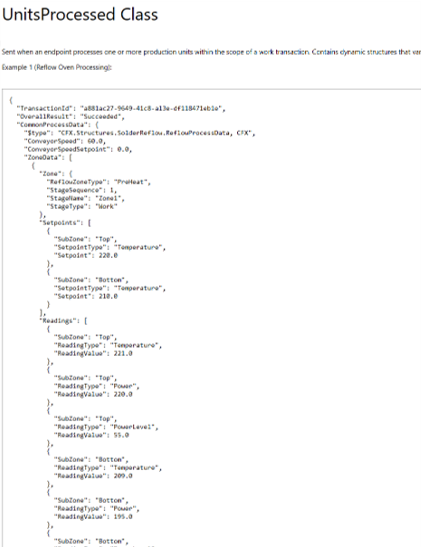
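The transaction housekeeping can be sketched as a small in-memory store keyed by TransactionID. This is an illustration, not Connect IoT code: the `TransactionContext` class is hypothetical, and instead of calling the MES it only records which service (ComplexTrackInMaterial or TrackOut) would be invoked for which material.

```python
from typing import Optional


class TransactionContext:
    """Hypothetical store mapping CFX TransactionIDs to the MES material being processed."""

    def __init__(self) -> None:
        self._materials: dict[str, str] = {}
        self.calls: list[tuple[str, str]] = []  # (service, material) pairs, for illustration only

    def on_work_started(self, transaction_id: str, material: str) -> None:
        self._materials[transaction_id] = material  # remember the context for later events
        self.calls.append(("ComplexTrackInMaterial", material))

    def material_for(self, transaction_id: str) -> Optional[str]:
        return self._materials.get(transaction_id)

    def on_work_completed(self, transaction_id: str) -> None:
        material = self._materials.pop(transaction_id, None)  # housekeeping: drop the context
        if material is None:
            raise KeyError(f"no Work Started seen for transaction {transaction_id}")
        self.calls.append(("TrackOut", material))


ctx = TransactionContext()
ctx.on_work_started("3f2a-01", "PANEL-0001")
print(ctx.material_for("3f2a-01"))  # PANEL-0001
ctx.on_work_completed("3f2a-01")
print(ctx.material_for("3f2a-01"), ctx.calls)
```

Between the two events, a handler for Units Processed can use `material_for` to find the material from the TransactionID alone.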

Equipment Integration - Units Processed CFX

The Units Processed event provides all the process context for the material that we want to store. We will store that information using the CM IoT Data Platform, a platform for large-scale data ingestion.

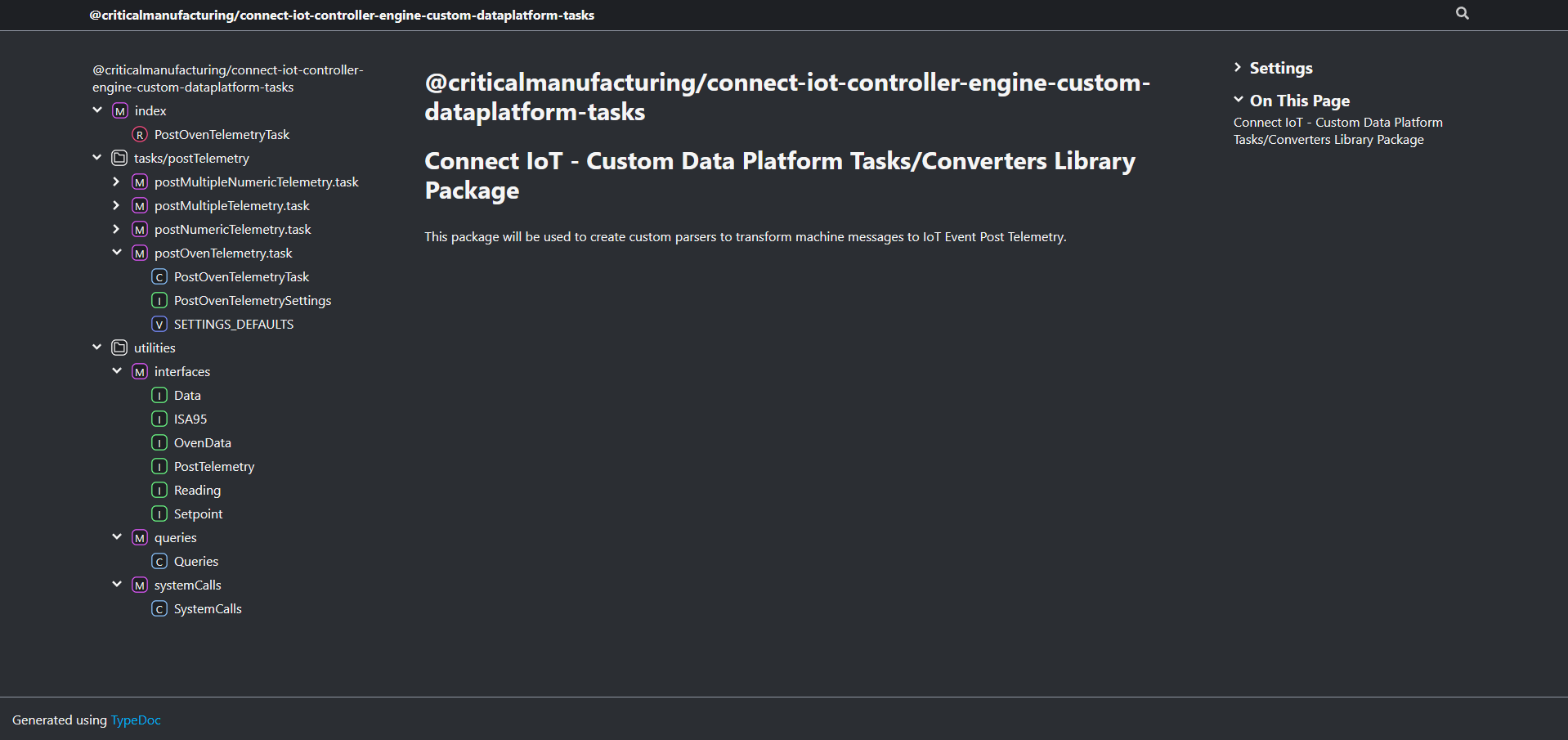

Out of the box for equipment integration, CM already provides two IoT event definitions tailored for machine integration: PostTelemetry and PostMeasurement.

- PostTelemetry: used for transmitting time-series and continuous data such as temperature, pressure, and operational status.

- PostMeasurement: used for transmitting data from tests, metrology processes, and inspections.

You can create your own IoT Event Definitions as well.
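The queries later in this post read columns such as `Parameters_Name`, `Parameters_NumericValues`, `Tags_Key` and `Tags_Value` from the `PostTelemetry` table, which suggests that a telemetry post carries a list of named parameters with numeric values plus free-form key/value tags. The sketch below models that inferred shape; the `Parameter` and `TelemetryPost` classes, the `to_columns` helper and the `"Sensor"` default class are our own assumptions, not the event definition shipped with the product.

```python
from dataclasses import dataclass, field


@dataclass
class Parameter:
    name: str
    numeric_values: list[float]
    parameter_class: str = "Sensor"  # hypothetical default


@dataclass
class TelemetryPost:
    """Assumed shape of one PostTelemetry event, inferred from the query's column names."""
    material_name: str
    resource_name: str
    parameters: list[Parameter]
    tags: dict[str, str] = field(default_factory=dict)

    def to_columns(self) -> dict:
        """Flatten into parallel arrays, the layout the SQL unpacks with ARRAY JOIN and indexOf."""
        return {
            "Material_Name": self.material_name,
            "Resource_Name": self.resource_name,
            "Parameters_Class": [p.parameter_class for p in self.parameters],
            "Parameters_Name": [p.name for p in self.parameters],
            "Parameters_NumericValues": [p.numeric_values for p in self.parameters],
            "Tags_Key": list(self.tags.keys()),
            "Tags_Value": list(self.tags.values()),
        }


post = TelemetryPost("PANEL-0001", "ReflowOven01",
                     [Parameter("ReadingValue", [230.0, 232.0])],
                     {"StageName": "Zone1", "Reading.SubZone": "Top"})
row = post.to_columns()
print(row["Tags_Value"][row["Tags_Key"].index("StageName")])  # Zone1
```

The last line is the Python counterpart of `Tags_Value[indexOf(Tags_Key, 'StageName')]` in the SQL.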

For our example, we will parse the data provided by the Units Processed event and post it as a set of telemetry events.

For Units Processed:

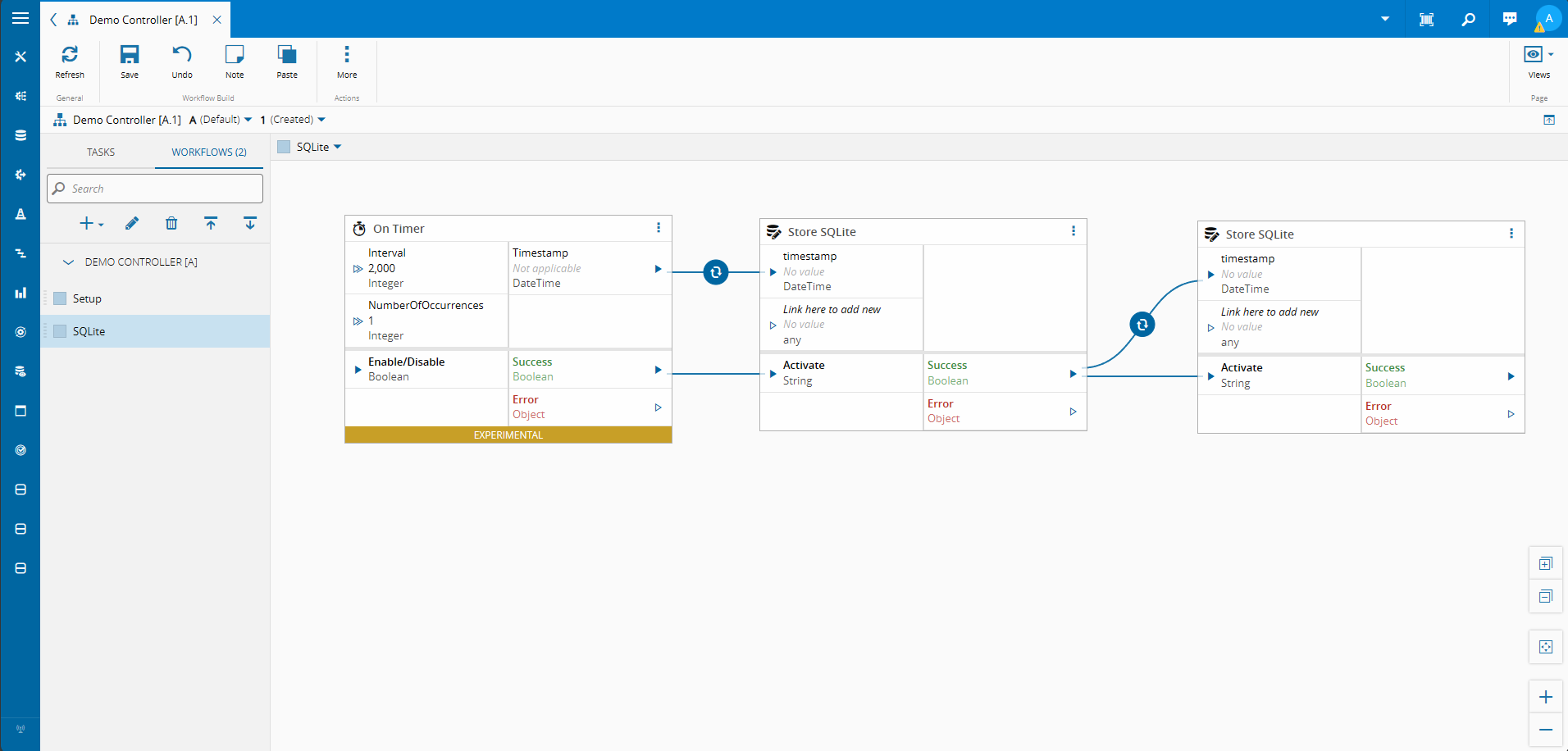

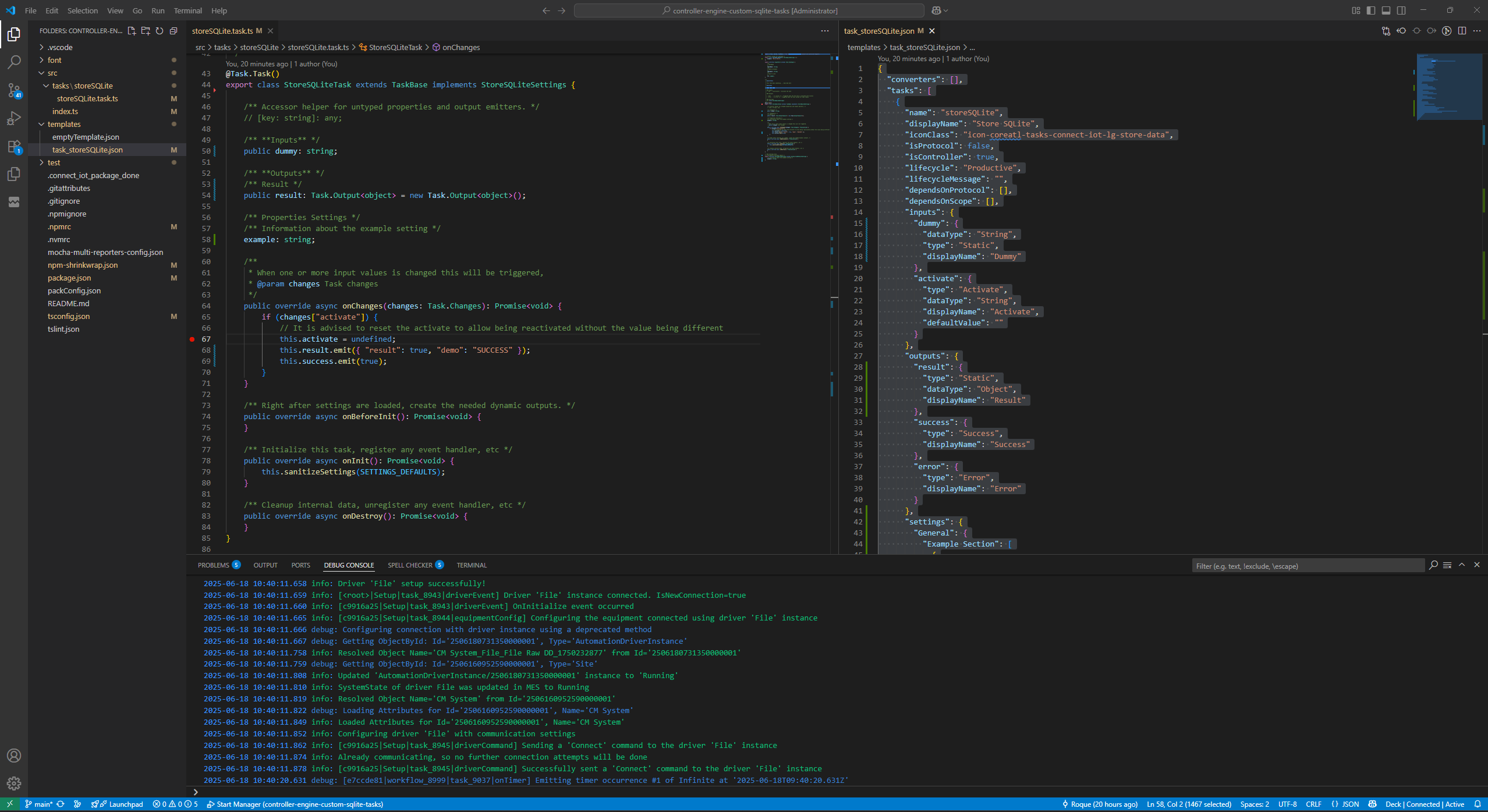

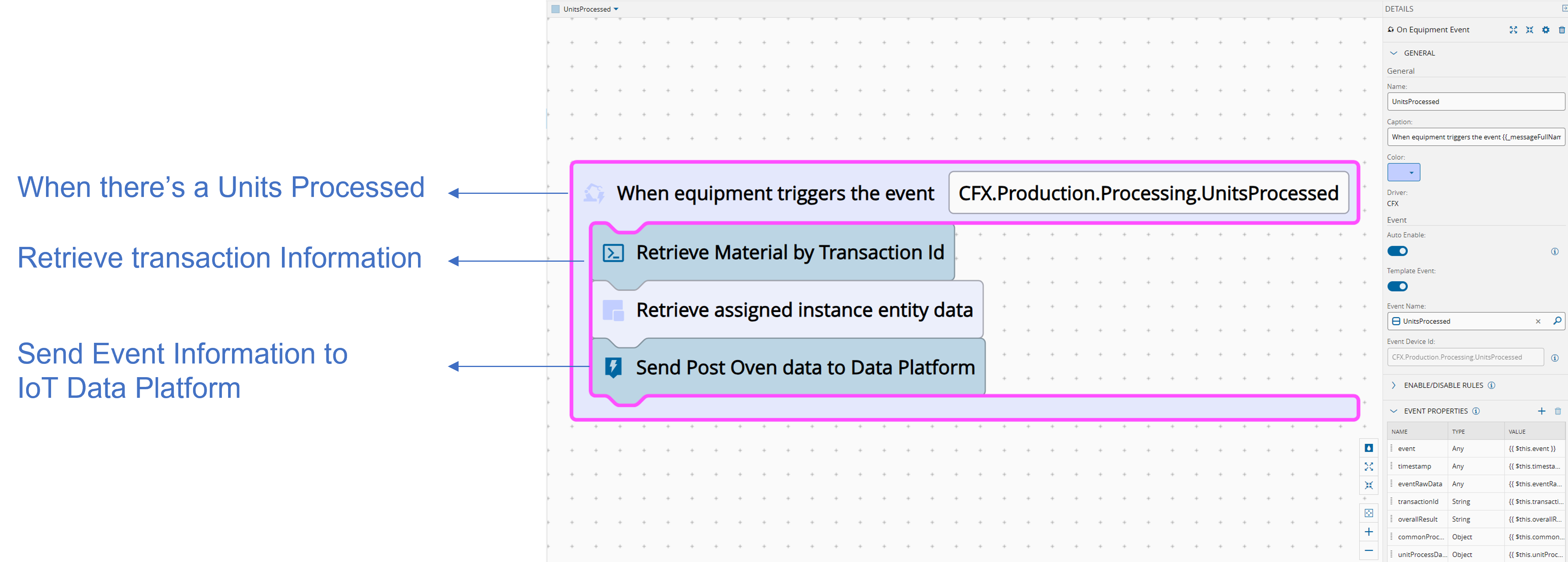

Equipment Integration - Units Processed Connect IoT

In Connect IoT, we hook into this event, retrieve some context information, and call a Post Oven task. The system provides a Post Event task by default, but for my use case it was useful to create my own custom version that does all the message parsing and posting.
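The parsing inside a custom task like Post Oven can be sketched as flattening the oven's nested zone structure into one telemetry record per reading. The input layout (`ZoneType`, `StageName`, `Readings`, `SubZone`, `ReadingType`, `Values`) is a hypothetical simplification of the Units Processed reflow data, and `flatten_oven_data` is our own helper; the output tag names (`ReflowZoneType`, `StageName`, `Reading.SubZone`, `Reading.ReadingType`) and the `ReadingValue` parameter are the ones the queries in this post read back.

```python
def flatten_oven_data(material: str, zones: list[dict]) -> list[dict]:
    """Turn nested zone readings into flat telemetry records, one per (zone, subzone, type)."""
    records = []
    for zone in zones:
        for reading in zone["Readings"]:
            records.append({
                "Material_Name": material,
                "Tags": {
                    "ReflowZoneType": zone["ZoneType"],
                    "StageName": zone["StageName"],
                    "Reading.SubZone": reading["SubZone"],
                    "Reading.ReadingType": reading["ReadingType"],
                },
                "Parameters": {"ReadingValue": reading["Values"]},
            })
    return records


zones = [{
    "ZoneType": "PreHeat", "StageName": "Zone1",
    "Readings": [
        {"SubZone": "Top", "ReadingType": "Temperature", "Values": [231.0, 233.0]},
        {"SubZone": "Bottom", "ReadingType": "Temperature", "Values": [228.5]},
    ],
}]
records = flatten_oven_data("PANEL-0001", zones)
print(len(records), records[0]["Tags"]["Reading.SubZone"])  # 2 Top
```

Keeping each sensor reading as its own record fits the one-measurement-per-post orientation of telemetry events; the reshaping into one row per material is left to the query side.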

Understanding Data

With this setup we can perform material tracking and post telemetry events. But what about the rest of the MES?

By design, the MES is a transactional system: it guarantees that all actions succeed before committing. This makes the system highly robust and consistent but, by the nature of the design, slower than event-based strategies, since it has to wait for every action before committing.

Event-Based MES

In CM MES we have the best of both worlds: the strict transactionality that a real-time control system must have, and events generated as things happen. Everything that happens on the shopfloor is a set of events. From changes to a material (Material Movements) to changes to a particular resource (ResourceStateChange), actions in the MES trigger a lightweight, contextualized event. These events can then be consumed, aggregated, and turned into any kind of shopfloor analytics.

The CDM (Canonical Data Model) is a set of events generated automatically by the MES. They are canonical in the sense that they are a known gold standard for all the data and metadata context that each MES action (API Definition) generates. These events are already enriched to give a more complete snapshot, and they carry the context of both the current and the previous state, which makes historical comparison and tracing easy. The events are then stored in a ClickHouse database. For more information on the strengths of ClickHouse, you can read here.

All of this simplifies analytics and makes it much faster, because the user no longer needs a deep understanding of the data model or has to correlate tables to gather data: the relevant data has already been infused into the event.
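Because each CDM event carries both the current and the previous state, change detection needs no extra lookup. A minimal sketch, assuming an event is a dictionary with hypothetical `Current` and `Previous` keys; the real CDM field layout may differ, and `changed_fields` is our own helper.

```python
def changed_fields(event: dict) -> dict:
    """Return {field: (previous, current)} for every field whose value changed."""
    current = event.get("Current", {})
    previous = event.get("Previous") or {}  # a 'Create' event may have no previous state
    return {
        key: (previous.get(key), value)
        for key, value in current.items()
        if previous.get(key) != value
    }


event = {
    "Header": {"Operation": "Update"},
    "Previous": {"Name": "PANEL-0001", "SystemState": "Queued", "Step": "Reflow"},
    "Current": {"Name": "PANEL-0001", "SystemState": "InProcess", "Step": "Reflow"},
}
print(changed_fields(event))  # {'SystemState': ('Queued', 'InProcess')}
```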

Users can also define their own events and create their own hooks to populate them. They can also run low-code logic whenever an event occurs, which is helpful for data enrichment, data replication or forwarding, or even to trigger some MES logic.

The best presentation done on this whole topic can be found here:

Combining System Data with Machine Data

In this scenario we post machine data through the Connect IoT integration, and the CDM events are generated simply by performing MES material tracking and recording a defect.

Our goal is then to create a dataset on which the machine learning model can be trained, by cross-referencing the machine data against the material defects created.

Post Telemetry

Our machine data is posted as telemetry events, and a telemetry event is designed so that each post is a measurement from one sensor. Our Reflow Oven is a bit more complex: it is split into different zones, each zone has top, bottom, and whole-zone readings, and it can capture temperatures as well as other telemetry data.

For the machine learning training dataset we want one row per material, with columns holding each unique reading context and the result. So we will aggregate the data into a structure that makes sense for our model.

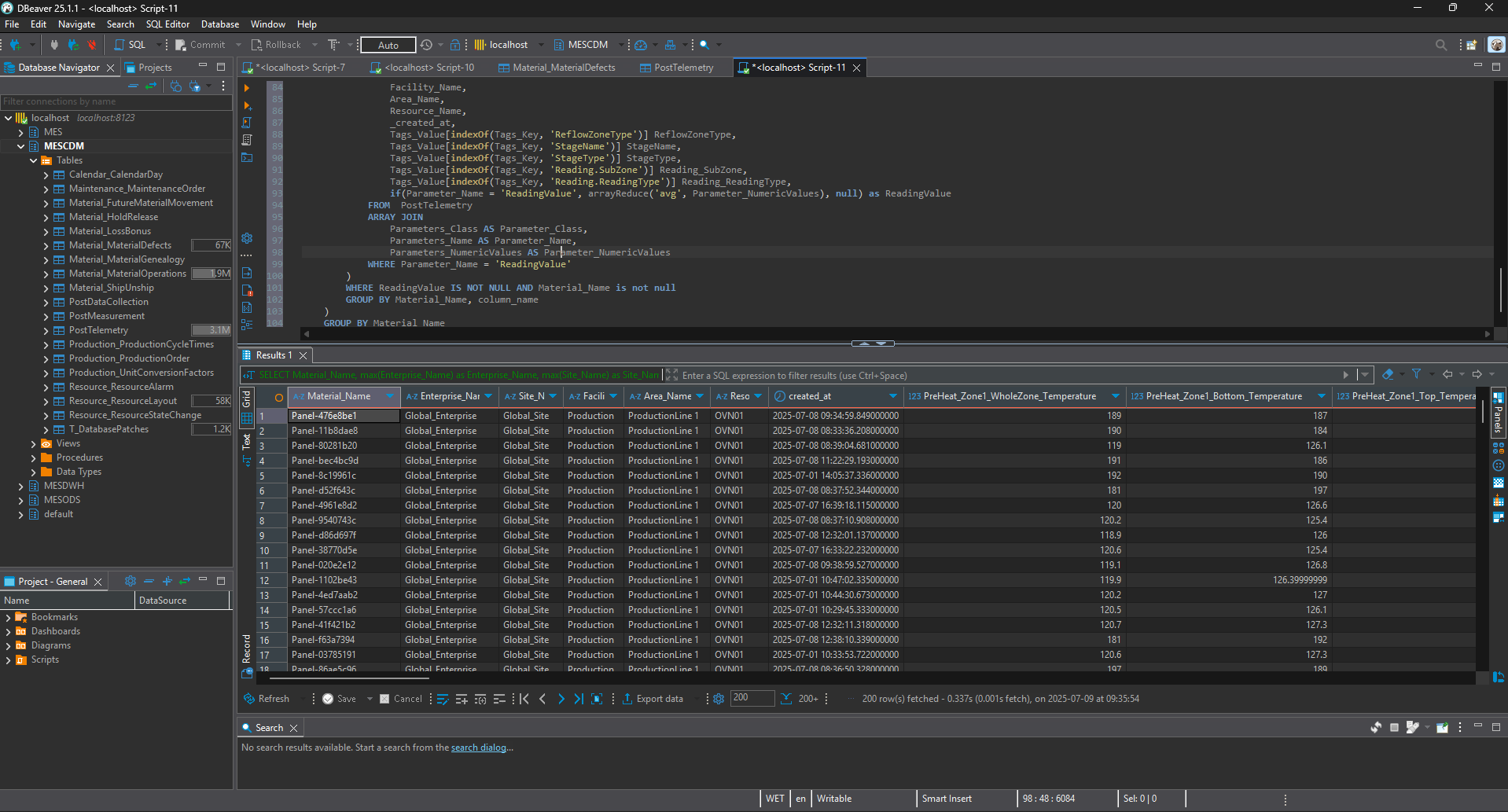

```sql
-- Pivot columns so as to have one row per material with a column per reading
SELECT
    Material_Name,
    max(Enterprise_Name) as Enterprise_Name,
    max(Site_Name) as Site_Name,
    max(Facility_Name) as Facility_Name,
    max(Area_Name) as Area_Name,
    max(Resource_Name) as Resource_Name,
    max(_created_at) as created_at,
    maxIf(value, column_name = 'PreHeat_Zone1_WholeZone_Temperature') AS PreHeat_Zone1_WholeZone_Temperature,
    (...)
FROM (
    -- Group the Post Telemetry reading values by material and measurement column name
    SELECT
        Material_Name,
        max(Enterprise_Name) as Enterprise_Name,
        max(Site_Name) as Site_Name,
        max(Facility_Name) as Facility_Name,
        max(Area_Name) as Area_Name,
        max(Resource_Name) as Resource_Name,
        max(_created_at) as _created_at,
        concat(ReflowZoneType, '_', StageName, '_', Reading_SubZone, '_', Reading_ReadingType) as column_name,
        max(ReadingValue) as value
    FROM (
        -- Aggregate Post Telemetry reading values
        SELECT
            Material_Name,
            Enterprise_Name,
            Site_Name,
            Facility_Name,
            Area_Name,
            Resource_Name,
            _created_at,
            Tags_Value[indexOf(Tags_Key, 'ReflowZoneType')] ReflowZoneType,
            Tags_Value[indexOf(Tags_Key, 'StageName')] StageName,
            Tags_Value[indexOf(Tags_Key, 'StageType')] StageType,
            Tags_Value[indexOf(Tags_Key, 'Reading.SubZone')] Reading_SubZone,
            Tags_Value[indexOf(Tags_Key, 'Reading.ReadingType')] Reading_ReadingType,
            if(Parameter_Name = 'ReadingValue', arrayReduce('avg', Parameter_NumericValues), null) as ReadingValue
        FROM PostTelemetry
        ARRAY JOIN
            Parameters_Class AS Parameter_Class,
            Parameters_Name AS Parameter_Name,
            Parameters_NumericValues AS Parameter_NumericValues
        WHERE Parameter_Name = 'ReadingValue'
    )
    WHERE ReadingValue IS NOT NULL AND Material_Name is not null
    GROUP BY Material_Name, column_name
)
GROUP BY Material_Name
```

Now we have a row per material and the row has a column for each zone with the collected temperature value.
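For readers less familiar with ClickHouse, the same long-to-wide reshaping can be sketched in plain Python. It assumes the tag values have already been extracted from each post, as the innermost subquery does; `pivot_readings` and the input keys are our own naming, and this illustrates the query's logic rather than something to run against the Data Platform.

```python
from collections import defaultdict
from statistics import mean


def pivot_readings(rows: list[dict]) -> dict[str, dict[str, float]]:
    """One row per material, one column per zone reading, as the three-level query does."""
    best: dict[tuple[str, str], float] = {}
    for r in rows:
        if not r.get("Material_Name") or not r.get("Values"):
            continue  # skip readings with no material or no values
        # concat(ReflowZoneType, '_', StageName, '_', Reading_SubZone, '_', Reading_ReadingType)
        column = "_".join([r["ReflowZoneType"], r["StageName"], r["SubZone"], r["ReadingType"]])
        value = mean(r["Values"])                     # arrayReduce('avg', ...) within one post
        key = (r["Material_Name"], column)
        best[key] = max(value, best.get(key, value))  # max(ReadingValue) per material and column
    table: dict[str, dict[str, float]] = defaultdict(dict)
    for (material, column), value in best.items():
        table[material][column] = value              # maxIf(value, column_name = ...) pivot
    return dict(table)


rows = [
    {"Material_Name": "PANEL-0001", "ReflowZoneType": "PreHeat", "StageName": "Zone1",
     "SubZone": "WholeZone", "ReadingType": "Temperature", "Values": [230.0, 234.0]},
    {"Material_Name": "PANEL-0001", "ReflowZoneType": "PreHeat", "StageName": "Zone1",
     "SubZone": "Top", "ReadingType": "Temperature", "Values": [236.0]},
    {"Material_Name": "PANEL-0002", "ReflowZoneType": "PreHeat", "StageName": "Zone1",
     "SubZone": "WholeZone", "ReadingType": "Temperature", "Values": [229.0]},
]
table = pivot_readings(rows)
print(table["PANEL-0001"])
# {'PreHeat_Zone1_WholeZone_Temperature': 232.0, 'PreHeat_Zone1_Top_Temperature': 236.0}
```

A reading that never occurs for a material simply has no key in its row here, whereas the SQL version returns a column for every reading listed in the outer `SELECT`.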

Material Defect

We can now cross-reference the Post Telemetry data with our material defects.

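```sql
SELECT
    -- Without join_use_nulls, unmatched rows get '' rather than NULL; both mean "no defect"
    if(Defs.Defect_Reason is null or Defs.Defect_Reason = '', false, true) as Classification,
    -- Qualify shared column names so they resolve to the telemetry side of the join
    Post.Material_Name,
    Post.created_at,
    PreHeat_Zone1_WholeZone_Temperature,
    PreHeat_Zone1_Bottom_Temperature,
    PreHeat_Zone1_Top_Temperature,
    (...)
    Post.Enterprise_Name,
    Post.Site_Name,
    Post.Facility_Name,
    Post.Area_Name,
    Post.Resource_Name
FROM (
    (...)  -- the pivot query from the previous section
) as Post
LEFT JOIN Material_MaterialDefects as Defs
    ON Post.Material_Name = Defs.Material_Name AND Defs.Header_Operation = 'Create'
```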

Now we have a query that returns all the machine data aggregated by material name, pivoted into context columns, and labelled with whether the material had a defect.
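The labelling rule in the query (a material counts as defective if it has at least one created defect with a non-empty reason) can be sketched in Python as follows. It assumes defects arrive as `(material, operation, reason)` tuples, a simplification of the `Material_MaterialDefects` CDM table of our own making, and `label_materials` is a hypothetical helper.

```python
def label_materials(features: dict[str, dict[str, float]],
                    defects: list[tuple[str, str, str]]) -> list[dict]:
    """Left-join feature rows with 'Create' defects and add a boolean Classification label."""
    defective = {
        material
        for material, operation, reason in defects
        if operation == "Create" and reason  # an empty or missing reason counts as no defect
    }
    return [
        {"Material_Name": material, "Classification": material in defective, **columns}
        for material, columns in features.items()
    ]


features = {"PANEL-0001": {"PreHeat_Zone1_WholeZone_Temperature": 232.0},
            "PANEL-0002": {"PreHeat_Zone1_WholeZone_Temperature": 229.0}}
defects = [("PANEL-0001", "Create", "Solder bridge"), ("PANEL-0002", "Create", "")]
labelled = label_materials(features, defects)
print([(r["Material_Name"], r["Classification"]) for r in labelled])
# [('PANEL-0001', True), ('PANEL-0002', False)]
```

One design difference is worth noting: the SQL `LEFT JOIN` yields one row per matching defect, so a material with several defects appears several times, while this sketch always keeps exactly one row per material.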

Creating a Dataset

In the MES, we can now create a dataset with this information.

This dataset can now be used for reporting or dashboard creation but, fundamentally for us, it can be used to train our machine learning model.
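Whatever tool consumes the dataset, training needs it split into a feature matrix and a label vector. A minimal sketch, assuming the dataset rows look like the output of the query above (a `Classification` flag plus one column per zone reading). `to_training_arrays` is a hypothetical helper, and leaving out context columns such as `Site_Name` and encoding missing readings as NaN are our own choices, not necessarily what the machine learning module does.

```python
def to_training_arrays(rows: list[dict], feature_columns: list[str]):
    """Split labelled rows into a feature matrix X and a label vector y (1 = defect)."""
    X: list[list[float]] = []
    y: list[int] = []
    for row in rows:
        features = []
        for column in feature_columns:
            value = row.get(column)
            # A reading missing for this material becomes NaN for a later imputer to handle.
            features.append(float("nan") if value is None else float(value))
        X.append(features)
        y.append(1 if row["Classification"] else 0)
    return X, y


feature_columns = ["PreHeat_Zone1_WholeZone_Temperature", "PreHeat_Zone1_Top_Temperature"]
rows = [
    {"Material_Name": "PANEL-0001", "Classification": True, "Site_Name": "Site1",
     "PreHeat_Zone1_WholeZone_Temperature": 232.0, "PreHeat_Zone1_Top_Temperature": 236.0},
    {"Material_Name": "PANEL-0002", "Classification": False, "Site_Name": "Site1",
     "PreHeat_Zone1_WholeZone_Temperature": 229.0},
]
X, y = to_training_arrays(rows, feature_columns)
print(X[0], y)  # [232.0, 236.0] [1, 0]
```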

Final Thoughts

For this first post our goal was data ingestion and processing. We collected data with Connect IoT and leveraged the data generated by the MES to relate the machine data to material defects. In the next post we will focus on creating our machine learning model and seeing it make predictions.